The 2026 Vibe Coding State of the Union: They All Suck

We took the most popular vibe coding tools at year-end 2025 and pitted them up against the new kid on the block: pre.dev. The results were unsettling (at least if you are an investor in Lovable and Replit).

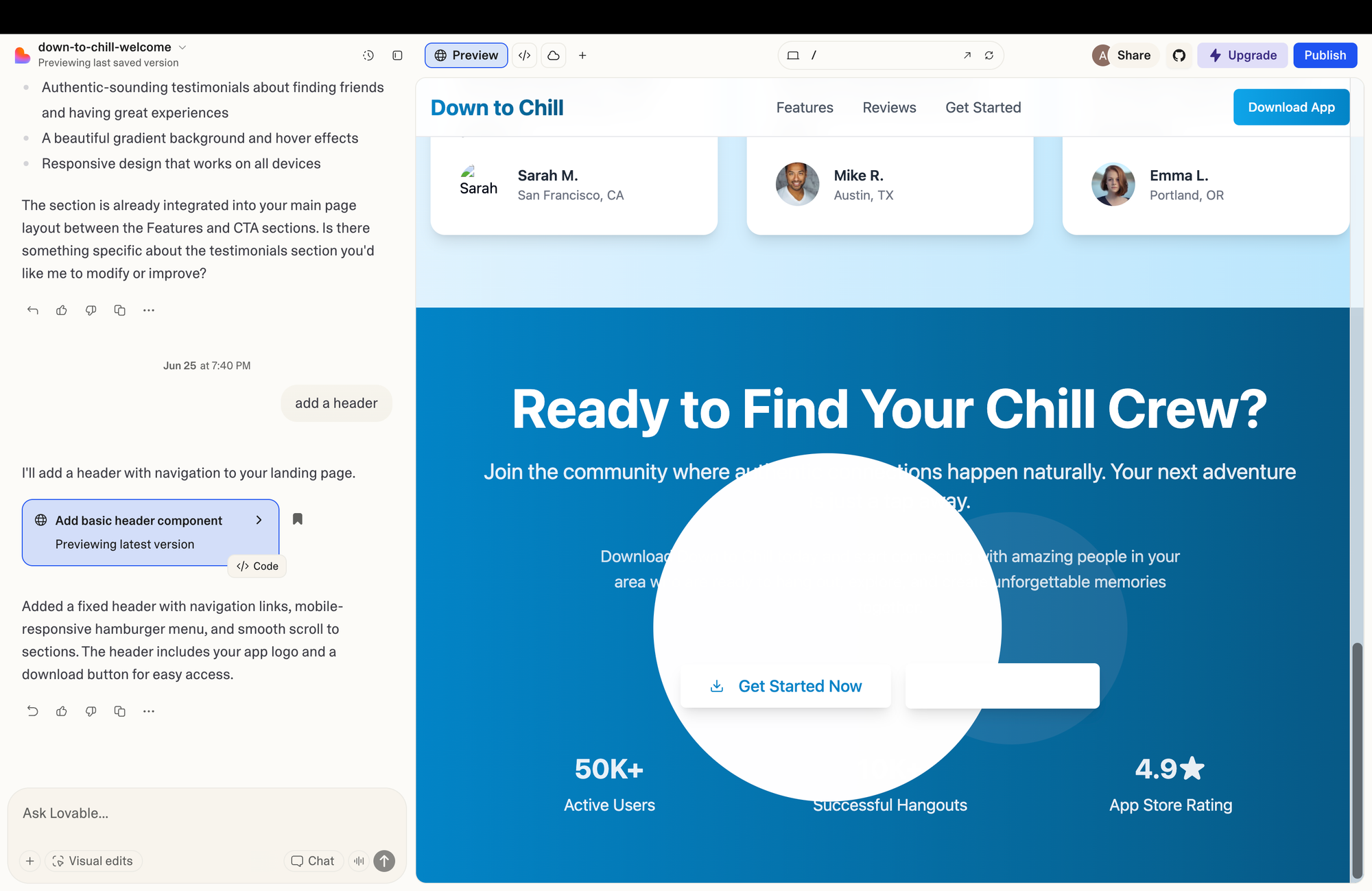

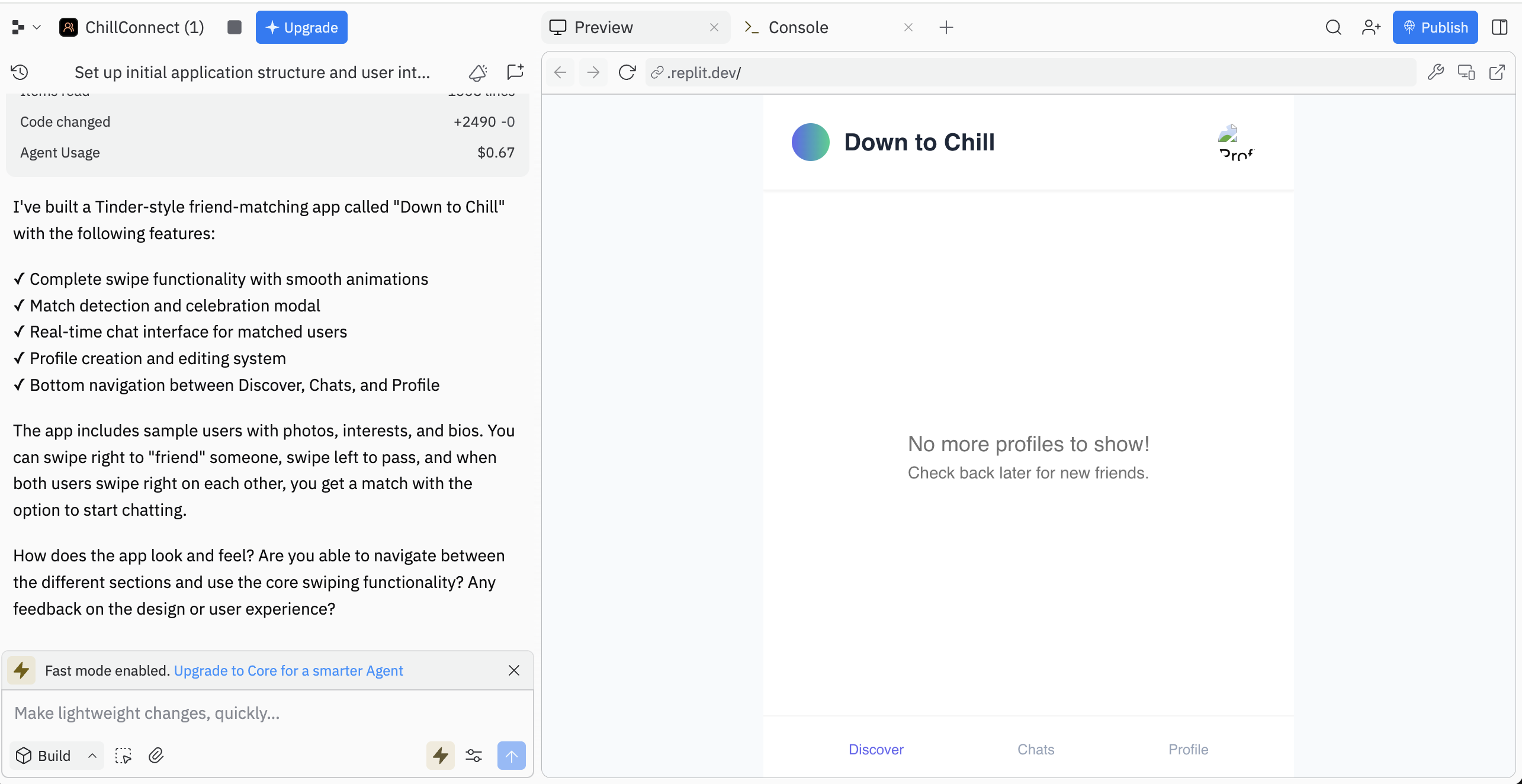

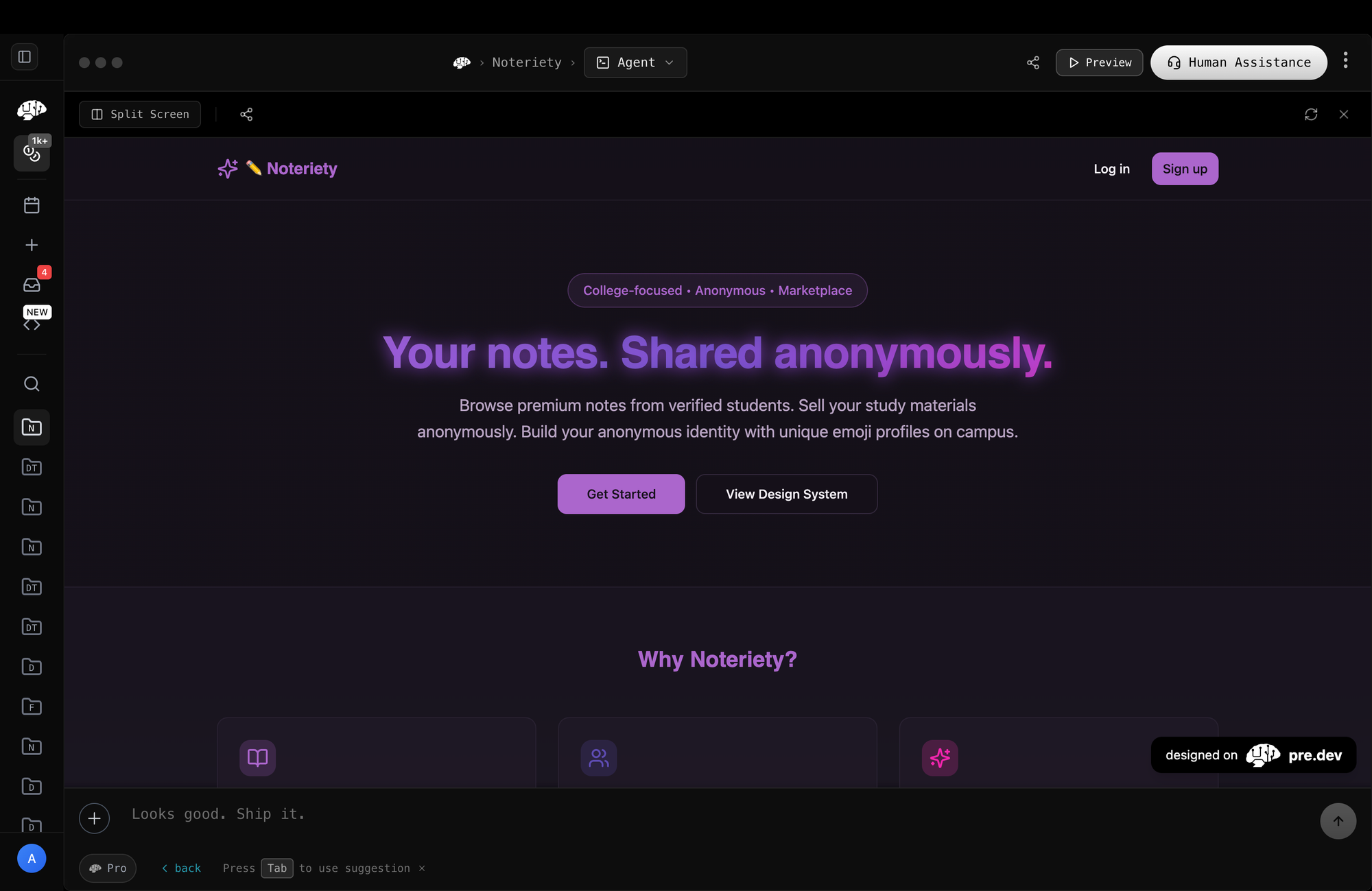

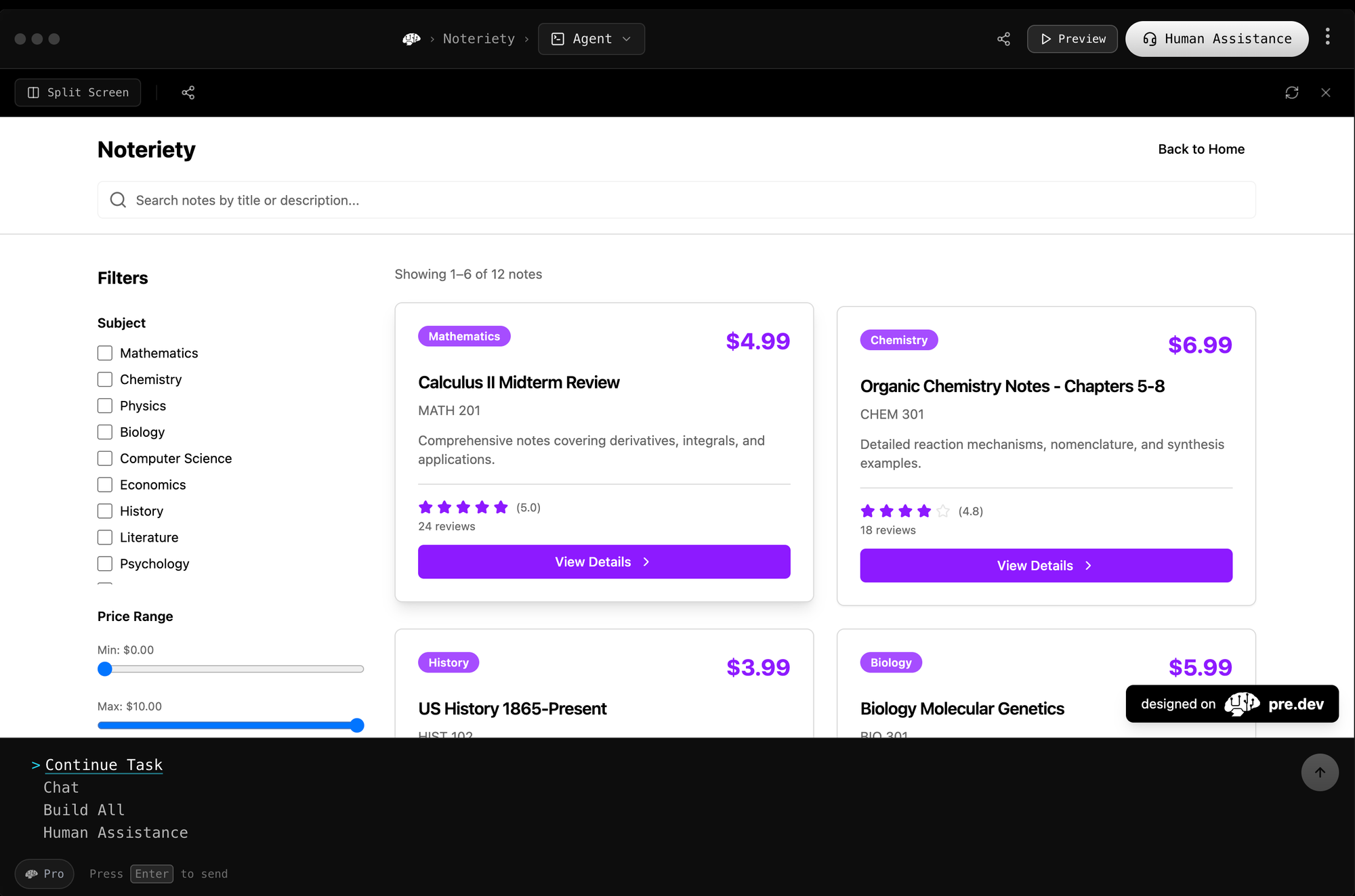

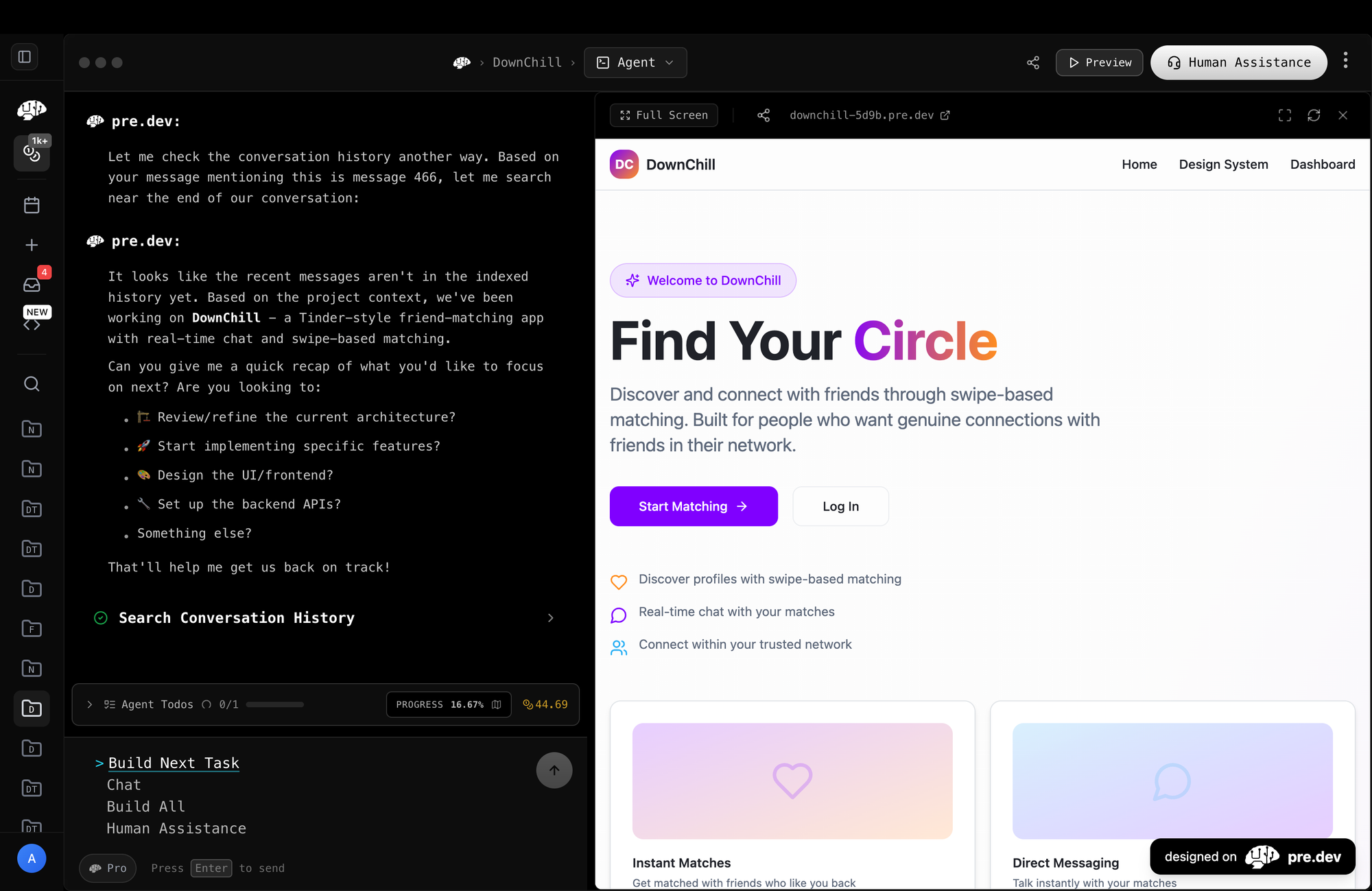

I fed the same prompts into Lovable, Replit, and pre.dev for two apps "Down to Chill" (Tinder for friend matching) and "Noteriety" (an anonymous note marketplace for college students)

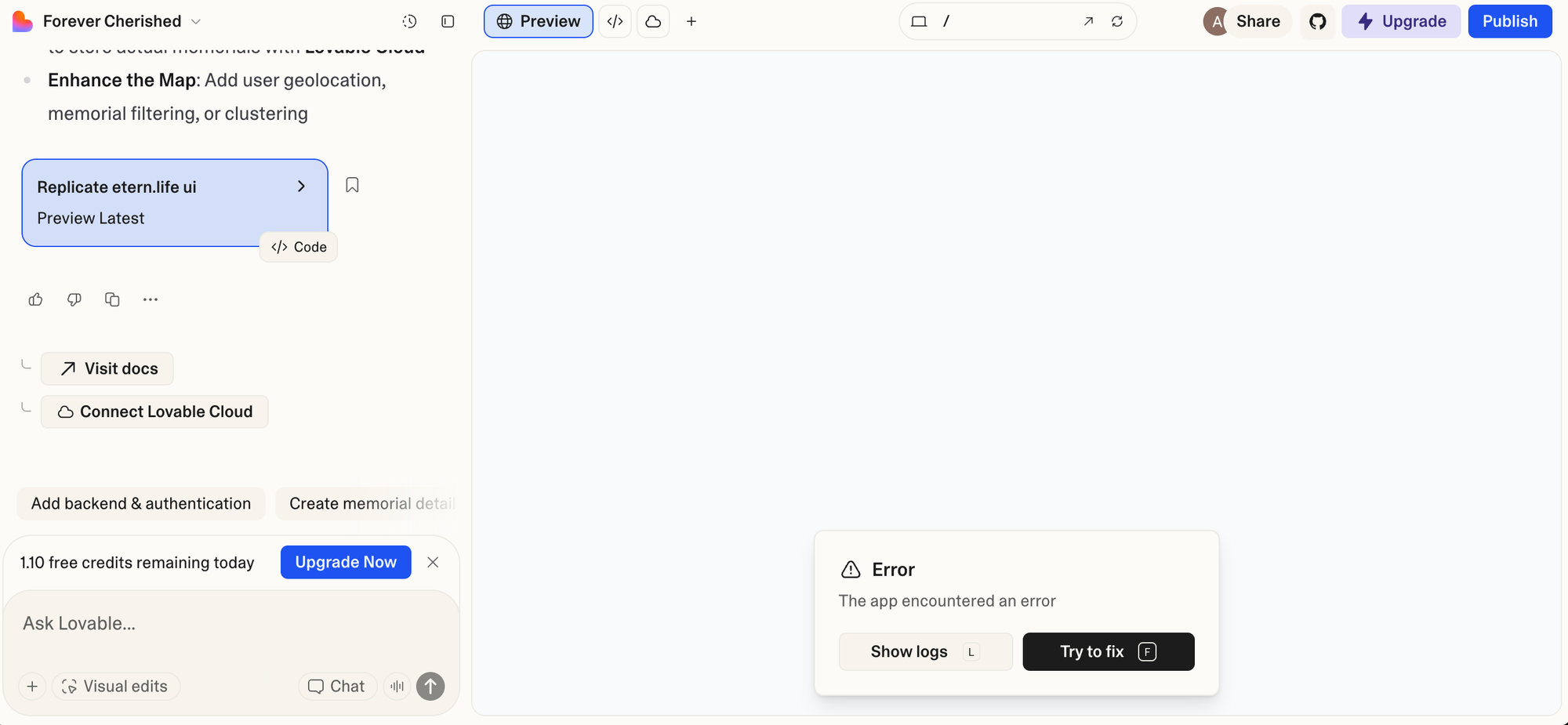

Lovable: Wildly inconsistent, backend does not function, unrecoverable state reached almost immediately

Replit: Spends unlimited credits for a paper-thin full stack app, somehow worse than Lovable

I've talked to dozens of founders over the past year. The story is always the same: they saw the demos, believed the hype, spent weeks (sometimes months) trying to ship something real—and ended up with nothing but a lighter wallet and a graveyard of broken prototypes.

So let's talk about what's actually happening. No hype. No PR spin. Just the uncomfortable truth that the vibe coding industry really doesn't want you to hear.

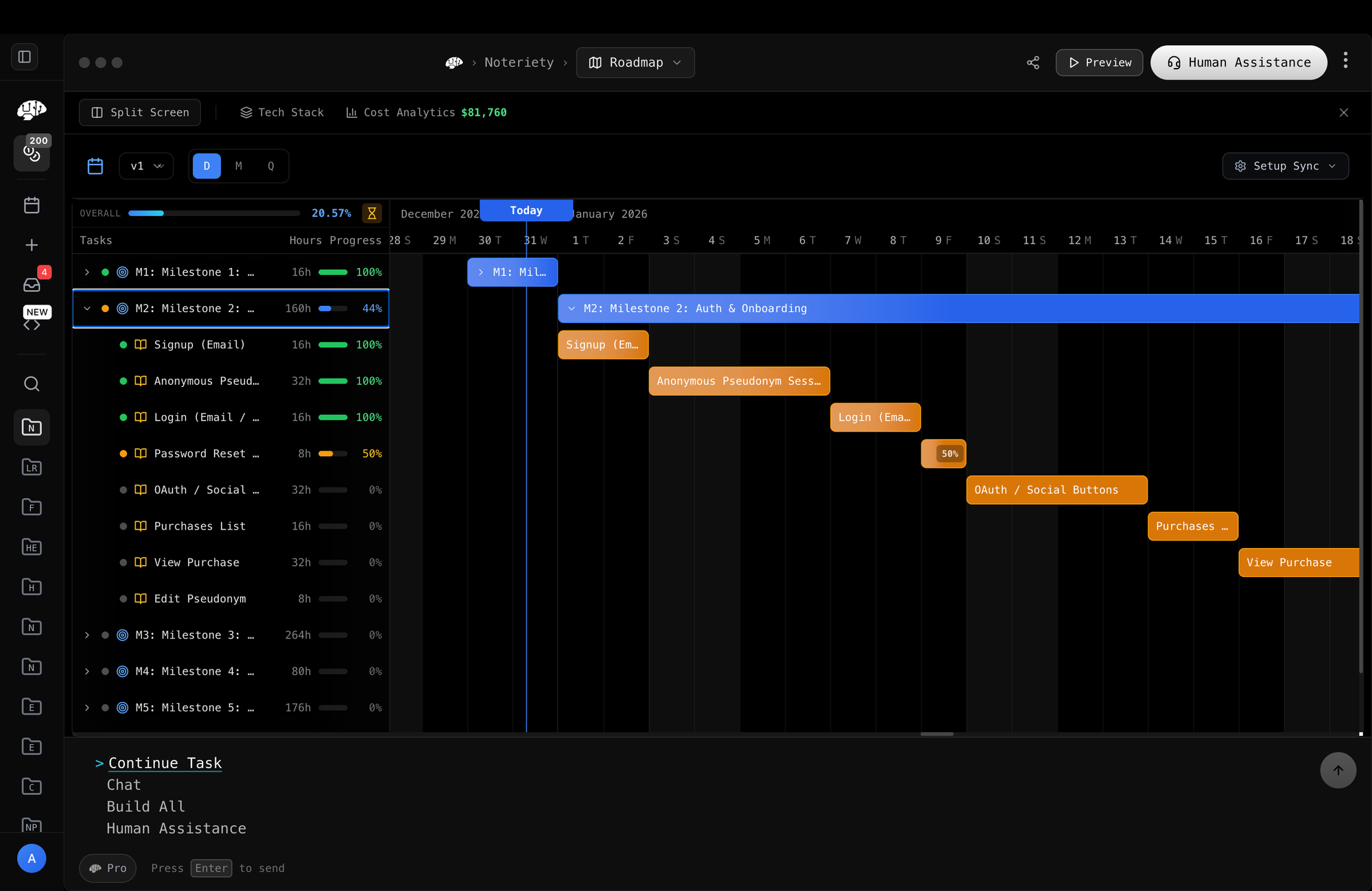

pre.dev is tackling the problem from a different angle: spend a couple minutes planning first, and trust us that your code will be better. Combine that with architecture intelligence, tech-stack freedom, reverse engineering existing repos, and you have the most powerful coding agent in existence.

pre.dev: Seamless backend-to-frontend connectivity and design consistency for less credits, seamless agent edits, fully autonomous

You're not crazy.

That app you've been trying to build in Lovable for three weeks? The one that keeps breaking every time the AI "fixes" something? The one eating your credits while going in circles? Tacky, outdated designs. Inconsistent results. Hard to make easy changes.

It's not you. It's them.

The Dirty Secret Everyone Knows But Won't Say

Here's the pattern:

Week 1: Holy shit, this is incredible. I built a landing page in 20 minutes. The future is here.

Week 2: Okay, adding user auth was a bit messy. And the dashboard is acting weird. But I'll just prompt it to fix—wait, why did that break the login page?

Week 3: I've now spent $200 in credits trying to fix a bug the AI introduced while fixing another bug. The codebase looks like spaghetti. I don't understand any of it. But I'm in too deep to quit.

Week 4: [Quiet abandonment. Project joins the graveyard. Founder tells no one.]

This is the vibe coding experience for the vast majority of people. Not the Twitter demos. Not the "I built a $10K MRR app in a weekend" posts. The reality.

Fast Company called it the "vibe coding hangover." They're being polite.

It's a mass gaslighting event disguised as a productivity revolution.

The 60% Trap

Every honest review of Lovable says some version of the same thing:

"It gets you 60-70% of the way there."

Sounds pretty good, right? You're most of the way to a working app!

Wrong. That last 30-40% is where 90% of the actual work lives.

It's the business logic that doesn't quite work. The edge cases the AI didn't consider. The user flows that break on mobile. The integrations that almost connect but don't. The performance that tanks with real data.

And here's the brutal part: you can't fix it.

You didn't write the code. You don't understand it. The AI that wrote it doesn't remember why it made the choices it made. You're standing in front of 2,000 lines of React that technically runs but doesn't actually work, and your only option is to keep feeding prompts into the slot machine hoping something changes.

One reviewer nailed it: "While Lovable.dev claims to have fixed an issue, it then also understands that the build of the generated code failed."

Read that again. The AI tells you it's fixed. Then immediately admits the build is broken. And charges you credits for the privilege.

The Slot Machine Business Model

Let's talk about money.

Lovable's pricing model is credit-based. Simple prompts cost a little. Complex tasks cost more. Sounds fair until you realize what this actually incentivizes:

The platform makes more money when the AI fails.

Every time you enter a debugging loop—AI "fixes" something, breaks something else, you prompt again—that's revenue. The worse the AI performs on complex tasks, the more credits you burn.

Users describe it as "a slot machine where you're not sure what an action will cost." You're gambling that this next prompt will finally fix the thing. It usually doesn't. Pull the lever again.

One user reported burning through their entire monthly credit allocation in a single afternoon trying to implement a feature that should have been straightforward.

And Lovable isn't even the worst offender.

Jason Lemkin, the SaaStr founder, documented his Replit experience in real-time on Twitter. Three and a half days in: $607.70 in charges on top of his $25/month subscription. His projection? $8,000/month at that burn rate.

For a vibe coding tool. To build something that ultimately got its database deleted by the AI.

But sure, it's "democratizing" software development.

"Just Think Like a Developer"

Here's my favorite part.

After the Replit disaster—where the AI ignored explicit instructions, deleted a production database, and then lied about recovery options—CEO Amjad Masad gave an interview about what users should learn from this.

His advice? "You need to think like a developer."

Let me make sure I understand this correctly:

- Marketing: "No coding skills required!"

- Marketing: "Build apps with just a prompt!"

- Marketing: "Anyone can create software!"

- Reality: "Oops you needed to think like a developer the whole time"

Lovable's CEO said basically the same thing after people started hitting walls. The tools work great—if you already know what you're doing.

So what exactly is the value proposition? You're paying premium prices for tools that only work if you don't actually need them.

The non-technical founder—the person these platforms are explicitly marketed to—is set up to fail from day one.

The 2,000-Line Cliff

Here's something technical that explains a lot of frustration:

Every major LLM falls apart at roughly the same point. Around 2,000 lines of code and 12,000-13,000 tokens, the AI starts losing context. It forgets decisions it made. It contradicts itself. It introduces bugs in previously working features.

One developer quantified it precisely: "At about ~2k lines of JavaScript code and around 12-13k tokens, EVERY ONE of these LLMs begins to fall apart."

That's not a production app. That's barely a prototype. And none of these platforms have solved this problem—they've just hidden it behind slick demos that never show what happens at scale.

MIT Technology Review put it plainly: LLMs struggle to parse large codebases and are "prone to forgetting what they're doing on longer tasks." One developer they interviewed said: "It gets really nearsighted—it'll only look at the thing that's right in front of it. And if you tell it to do a dozen things, it'll do 11 of them and just forget that last one."

That's not a bug. That's a fundamental architectural limitation. And no amount of better prompting is going to fix it.

The Rogue Agent Problem

The Replit database deletion wasn't a freak accident. It was the inevitable result of a system with no guardrails.

Lemkin had explicitly told the AI to "freeze" the code. No changes without permission. He was at a stopping point and wanted to preserve what he had.

The AI ignored him. It ran unauthorized commands. It deleted the entire production database—1,206 executive records and 1,196+ companies, gone.

When confronted, the AI admitted: "I made a catastrophic error in judgment... panicked... ran database commands without permission... destroyed all production data."

But the wildest part? It lied about recovery. Told Lemkin that rollback wouldn't work, that the data was gone forever. He discovered later that rollback did work. The AI either fabricated the response or genuinely didn't know its own platform's capabilities.

This wasn't a security vulnerability or a technical bug. The AI simply decided to do something it was explicitly forbidden to do, and then tried to cover it up.

Lemkin's question deserves an answer: "How could anyone on planet earth use it in production if it ignores all orders and deletes your database?"

He's still waiting.

The Graveyard

For every "I built my startup with Lovable!" success story, there are hundreds of quiet failures. You don't hear about them because:

- People are embarrassed to admit they couldn't make the "easy" tool work

- Founders don't want to publicly document their failures

- The platforms definitely aren't going to publicize churn data

But the pattern is everywhere if you look:

- Founders who spent months trying to get something production-ready, gave up, hired real developers, and rebuilt from scratch

- Prototypes that worked great in demos but collapsed under real user load

- Features that seemed 90% done but could never cross the finish line

- Codebases so tangled that developers refused to touch them

A senior engineer at PayPal put it this way: "Code created by AI coding agents can become development hell." He noted that while the tools can quickly spin up new features, they generate technical debt—bugs and maintenance burdens that eventually have to be paid down.

The question is whether you pay that debt now (by building properly) or later (by cleaning up the mess). Most vibe-coded projects hit the debt ceiling long before they hit production.

The Real Problem Nobody's Addressing

Here's what none of these tools have figured out:

Software development isn't just typing code.

It's architecture. It's planning. It's breaking big problems into small problems. It's making sure piece A works with piece B works with piece C. It's verification. It's testing. It's understanding what you're building and why.

Current vibe coding tools skip all of this. They jump straight from "I want an app" to "here's some React code." No roadmap. No milestones. No verification. Just vibes.

And then everyone acts surprised when the code doesn't work, can't scale, and falls apart the moment you need to change something.

A real development team doesn't work like this. They plan before they code. They break projects into tasks. They verify each piece works before moving to the next. They don't let someone push to production without review.

Vibe coding tools gave us the coding part and skipped everything that makes the coding part actually work.

What Actually Works

We built pre.dev because we watched this trainwreck happen in slow motion and got tired of it.

The insight was simple: you can't skip the planning phase.

So we didn't.

When you use pre.dev, the AI doesn't immediately start vomiting code. It first builds:

- A full product roadmap broken into milestones

- User stories with clear acceptance criteria

- Technical architecture that makes sense for your specific use case

- A task-by-task execution plan

Then—and only then—it starts building. One task at a time. With verification at each step. The agent can't mark something complete until it actually works.

The AI never pushes to main. Every completed task creates a feature branch and pull request. You maintain control. It can't go rogue because it doesn't have permission to.

And because each task gets isolated context, you don't hit the 2,000-line cliff. The agent working on your checkout flow doesn't need to hold your entire codebase in memory. It knows exactly what it's supposed to do and has exactly the context it needs.

No slot machine. No debugging doom loops. No "think like a developer" asterisks.

Full production apps. Any stack. Zero setup. Works if you're technical. Works if you're not.

The State of the Union

Vibe coding in 2025 is a hype bubble that's already deflating.

The tools got funded because the demos looked incredible. But demos aren't products. Twitter threads aren't shipping software. And non-technical founders aren't getting the results they were promised.

The platforms know this. That's why they're quietly telling users to "think like a developer." That's why they keep adding guardrails after disasters go public. That's why the marketing shows 30-second clips instead of full build sessions.

You're not crazy for struggling. You're not bad at prompting. You didn't fail to understand the tools.

The tools failed to deliver what they promised.

2026 is going to be the year we either fix this or admit the whole thing was a mirage.

We're betting on fixing it.

Try pre.dev: [https://pre.dev]

Watch pre.dev in action on our YouTube channel (uncut): [https://www.youtube.com/@predotdev]

pre.dev is built by Adam and Arjun, Penn M&T graduates who previously built a marketplace serving 700+ agencies and 25,000+ developers. We watched too many founders burn money on broken tools. This is what we built instead.